What is this project about?

Scope

The main goal of this project is to create a Kubernetes cluster at home using ARM/x86 bare metal nodes (Raspberry Pis and low-cost refurbished mini PCs). A second goal is to automate the cluster's deployment and configuration by applying IaC (infrastructure as code) and GitOps methodologies with tools like Ansible, cloud-init and Flux CD.

The project scope includes the automatic installation and configuration of a lightweight Kubernetes flavor based on K3S. It also includes the deployment of basic cluster services such as:

- Distributed block storage for pods' persistent volumes, Longhorn.

- S3 Object storage, Minio.

- Backup/restore solution for the cluster, Velero and Restic.

- Certificate management, Cert-Manager.

- Secrets management solution, Vault and External Secrets.

- Identity and Access Management (IAM) providing Single Sign-On, Keycloak.

- Observability platform based on:

  - Metrics monitoring solution, Prometheus.

  - Logging and analytics solution, combined EFK+LG stacks (Elasticsearch-Fluentd/Fluentbit-Kibana + Loki-Grafana).

  - Distributed tracing solution, Tempo.

The scope also includes the deployment of services for building a cloud-native microservices architecture:

- Service mesh architecture, Istio

- API security with OAuth 2.0 and OpenID Connect, using the IAM solution, Keycloak

- Streaming platform, Kafka

Design Principles

- Use hybrid x86/ARM bare metal nodes, combining Raspberry Pi nodes (ARM) and x86 mini PCs (HP EliteDesk 800 G3) in the same cluster.

- Use a lightweight Kubernetes distribution (K3S), whose smaller memory footprint makes it ideal for running on Raspberry Pis.

- Use distributed block storage technology, instead of a centralized NFS system, for pod persistent storage. Kubernetes distributed block storage solutions, like Rook/Ceph or Longhorn, have added ARM 64-bit support in their latest versions.

- Use open-source projects under the CNCF (Cloud Native Computing Foundation) umbrella.

- Use the latest version of each open-source project to be able to test the latest Kubernetes capabilities.

- Automate the deployment of the cluster using IaC (infrastructure as code) and GitOps methodologies with tools like:

  - cloud-init to automate the initial OS installation of the cluster nodes.

  - Ansible to automate the configuration of the cluster nodes, the installation of Kubernetes and external services, and the triggering of the cluster bootstrap (FluxCD bootstrap).

  - Flux CD to automatically provision Kubernetes applications from a Git repository.
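The following Python sketch gives an idea of what the cloud-init step automates. It renders a minimal `#cloud-config` user-data document for a node. This is an illustrative assumption, not the project's actual template. The hostname, user name, timezone and SSH key are hypothetical placeholders. Cloud-init parses user-data as YAML, and for simple values like these, JSON output is also valid YAML. That makes `json.dumps` enough to produce the body after the mandatory `#cloud-config` header line.

```python
import json


def render_user_data(hostname: str, user: str, ssh_key: str) -> str:
    """Return a minimal #cloud-config document (hypothetical example values)."""
    config = {
        "hostname": hostname,            # node hostname
        "manage_etc_hosts": True,        # let cloud-init keep /etc/hosts in sync
        "timezone": "Etc/UTC",           # assumed timezone, adjust as needed
        "package_update": True,          # refresh the package index on first boot
        "users": [
            {
                "name": user,
                "groups": ["sudo"],
                "shell": "/bin/bash",
                "sudo": "ALL=(ALL) NOPASSWD:ALL",
                "lock_passwd": True,      # disable password login, SSH keys only
                "ssh_authorized_keys": [ssh_key],
            }
        ],
    }
    # cloud-init requires the first line to be "#cloud-config";
    # for plain ASCII values like these, JSON is also valid YAML.
    return "#cloud-config\n" + json.dumps(config, indent=2) + "\n"


if __name__ == "__main__":
    # Placeholder values, not taken from the project.
    print(render_user_data("example-node", "ansible", "ssh-ed25519 <public-key> ansible@control"))
```

On Ubuntu's Raspberry Pi images, a document like this would typically be written to the `user-data` file on the boot partition before the node boots for the first time.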

Technology Stack

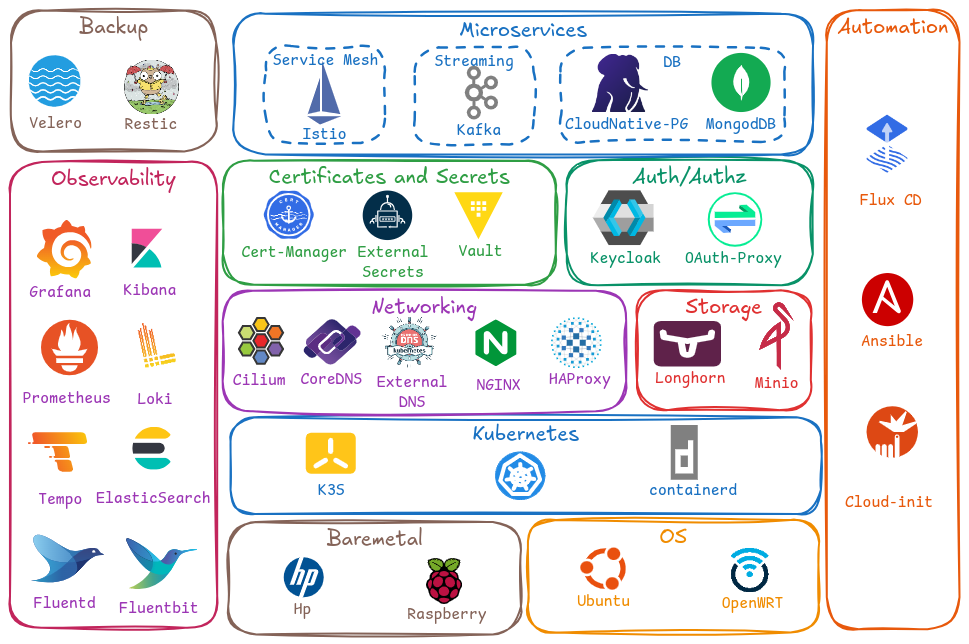

The following picture shows the set of opensource solutions used for building this cluster:

| Name | Description |
|---|---|
| Ansible | Automate OS configuration, external services installation, and K3S installation and bootstrapping |
| FluxCD | GitOps tool for deploying applications to Kubernetes |
| Cloud-init | Automate OS initial installation |
| Ubuntu | Cluster nodes OS |
| OpenWrt | Router/Firewall OS |
| K3S | Lightweight distribution of Kubernetes |
| Containerd | Container runtime integrated with K3S |
| Cilium CNI | Kubernetes Networking (CNI) and Load Balancer |
| CoreDNS | Kubernetes DNS |
| External-DNS | External DNS synchronization |
| HAProxy | Kubernetes API Load Balancer |
| NGINX Ingress Controller | Kubernetes Ingress Controller |
| Longhorn | Kubernetes distributed block storage |
| Minio | S3 Object Storage solution |
| Cert-Manager | TLS Certificates management |
| Hashicorp Vault | Secrets Management solution |
| External Secrets Operator | Sync Kubernetes Secrets from Hashicorp Vault |
| Keycloak | Identity and Access Management |
| OAuth2 Proxy | OAuth2.0 Proxy |
| Velero | Kubernetes Backup and Restore solution |
| Restic | OS Backup and Restore solution |
| Prometheus | Metrics monitoring and alerting |
| Fluentd | Logs forwarding and distribution |
| Fluent-bit | Logs collection |
| Grafana Loki | Logs aggregation |
| ElasticSearch | Log analytics |
| Kibana | Logs analytics Dashboards |
| Grafana Tempo | Distributed tracing monitoring |
| Grafana | Monitoring Dashboards |
| Istio | Kubernetes Service Mesh |
| Kafka Strimzi Operator | Kubernetes Operator for running Kafka, Event Streaming and distribution |
| CloudNative-PG | Kubernetes Operator for running PostgreSQL |
| MongoDB Operator | Kubernetes Operator for running MongoDB |

Deprecated Technology

The following technologies were used in previous releases of PiCluster, but they have been deprecated and are no longer maintained.

| Name | Description |
|---|---|
| Metal-LB | Load-balancer implementation for bare metal Kubernetes clusters. Replaced by Cilium CNI load balancing capabilities |
| Traefik | Kubernetes Ingress Controller. Replaced by NGINX Ingress Controller |
| ArgoCD | GitOps tool. Replaced by FluxCD |
| Flannel | Kubernetes CNI plugin embedded into K3S. Replaced by Cilium CNI |

External Resources and Services

Even though the premise is to deploy all services in the Kubernetes cluster, a few external services/resources are still needed. Below is a list of these external resources/services and the reason each one is needed.

Cloud external services

Note: These resources are optional. The homelab still works without them, but it won't have trusted certificates.

| Provider | Resource | Purpose |
|---|---|---|
| Let's Encrypt | TLS Certificate Authority (CA) | Signed valid TLS certificates |
| IONOS | DNS | DNS and DNS-01 challenge for certificates |
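In the DNS-01 challenge, cert-manager acts as the ACME client. It proves control of the domain by publishing a TXT record at `_acme-challenge.<domain>` through the configured DNS-01 provider, which is IONOS here. RFC 8555 (section 8.4) defines the record value as the unpadded base64url encoding of the SHA-256 digest of the key authorization, `token + "." + account-key thumbprint`. The minimal Python sketch below shows that computation. The function names are hypothetical, and the token, thumbprint and domain used with them are made-up placeholders.

```python
import base64
import hashlib


def b64url(data: bytes) -> str:
    """Base64url encoding without '=' padding, as required by ACME (RFC 8555)."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def dns01_record(domain: str, token: str, jwk_thumbprint: str) -> tuple[str, str]:
    """Return (record name, TXT value) for an ACME DNS-01 challenge."""
    # For a wildcard certificate (*.example.com) the challenge is validated
    # on the base domain, so the "*." prefix is dropped.
    base_domain = domain.removeprefix("*.")
    key_authorization = f"{token}.{jwk_thumbprint}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return f"_acme-challenge.{base_domain}", b64url(digest)


if __name__ == "__main__":
    # Placeholders: a real token is issued by the ACME server, and the
    # thumbprint is derived from the ACME account key (RFC 7638).
    name, value = dns01_record("*.example.com", "placeholder-token", "placeholder-thumbprint")
    print(name, value)
```

Because the value only depends on the token and the account key, any DNS provider with an API can satisfy the challenge. This is why switching providers mainly requires a different cert-manager DNS-01 provider or webhook.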

Alternatives:

- Use a private PKI (custom CA to sign certificates).

  Currently supported. Only minor changes are required. See details in Doc: Quick Start instructions.

- Use another DNS provider.

  Cert-manager or Certbot, used to automatically obtain certificates from Let's Encrypt, can be used with other DNS providers. This requires further modifications to the way the cert-manager application is deployed, because new providers and/or webhooks/plugins might be needed.

  Currently, only the ACME issuer (Let's Encrypt) using IONOS as the DNS-01 challenge provider is configured. Check the list of supported DNS-01 providers.

Self-hosted external services

There is another set of services that I have decided to run outside the Kubernetes cluster, self-hosting them.

| External Service | Resource | Purpose |
|---|---|---|
| Minio | S3 Object Store | Cluster Backup |
| Hashicorp Vault | Secrets Management | Cluster secrets management |

The Minio backup service is hosted in a VM running in a public cloud, using the Oracle Cloud Infrastructure (OCI) free tier.

The Vault service runs on one of the cluster nodes, node1. Vault's Kubernetes authentication method needs access to the Kubernetes API, so I won't host the Vault service in the public cloud.
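Here is why the Kubernetes auth method ties Vault to the cluster network. A pod logs in by sending its service account JWT and a Vault role to the auth method's `login` endpoint. Vault then validates that JWT by calling the Kubernetes TokenReview API. The Python sketch below only builds the login request and does not send it. It assumes the auth method is mounted at the default `kubernetes` path. The Vault address, role name and helper functions are hypothetical.

```python
import json
import urllib.request

# Standard location of a pod's service account token inside the container.
SA_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def build_login_request(vault_addr: str, role: str, jwt: str,
                        mount: str = "kubernetes") -> urllib.request.Request:
    """Build (but do not send) a Vault Kubernetes auth login request."""
    body = json.dumps({"role": role, "jwt": jwt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{vault_addr.rstrip('/')}/v1/auth/{mount}/login",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def read_sa_token(path: str = SA_TOKEN_PATH) -> str:
    """Read the pod's service account JWT (the file only exists inside a pod)."""
    with open(path, encoding="utf-8") as f:
        return f.read().strip()


if __name__ == "__main__":
    # Hypothetical address and role; "<jwt>" stands in for a real token.
    req = build_login_request("https://vault.example.com:8200", "my-app", "<jwt>")
    print(req.get_method(), req.full_url)
    # Inside a pod, urllib.request.urlopen(req) would send it; Vault would
    # then call the Kubernetes TokenReview API to validate the JWT.
```

Vault's reply to a successful login contains a client token. Tools such as External Secrets Operator use that token to read secrets, which is why Vault must be able to reach the cluster's API server.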

What I have built so far

From a hardware perspective, I built three different versions of the cluster:

- Cluster 1.0: Basic version using a dedicated USB flash drive for each node and a centralized SAN as additional storage.

- Cluster 2.0: Adding a dedicated SSD disk to each node of the cluster, greatly improving overall cluster performance.

- Cluster 3.0: Creating a hybrid ARM/x86 Kubernetes cluster, combining Raspberry Pi nodes with x86 mini PCs.
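In a hybrid cluster like version 3.0, every node carries the well-known `kubernetes.io/arch` label: `arm64` on the Raspberry Pis and `amd64` on the x86 mini PCs. Container images therefore need to be available for both architectures, unless a workload is pinned to one of them with a node selector. The Python sketch below groups nodes by that label, taking as input the JSON printed by `kubectl get nodes -o json`. The function name and the node names in the example are hypothetical.

```python
import json
from collections import defaultdict


def nodes_by_arch(nodes_json: str) -> dict[str, list[str]]:
    """Group node names by their kubernetes.io/arch label.

    `nodes_json` is the text printed by `kubectl get nodes -o json`.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for node in json.loads(nodes_json)["items"]:
        labels = node["metadata"].get("labels", {})
        arch = labels.get("kubernetes.io/arch", "unknown")
        groups[arch].append(node["metadata"]["name"])
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical two-node cluster: one Raspberry Pi and one mini PC.
    sample = json.dumps({"items": [
        {"metadata": {"name": "rpi-1", "labels": {"kubernetes.io/arch": "arm64"}}},
        {"metadata": {"name": "minipc-1", "labels": {"kubernetes.io/arch": "amd64"}}},
    ]})
    print(nodes_by_arch(sample))  # {'arm64': ['rpi-1'], 'amd64': ['minipc-1']}
```

Against a live cluster, the input could come from `subprocess.run(["kubectl", "get", "nodes", "-o", "json"], capture_output=True, text=True).stdout`.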

What I have developed so far

From a software perspective, I have developed the following:

- Cloud-init template files for the initial OS installation of Raspberry Pi nodes.

  Source code can be found in the Pi Cluster Git repository under the `metal/rpi/cloud-init` directory.

- Automated initial OS installation of x86_64 nodes using a PXE server and Ubuntu's autoinstall template files.

- Ansible playbook and roles for configuring cluster nodes and for installing and bootstrapping the K3S cluster.

  Source code can be found in the Pi Cluster Git repository under the `/ansible` directory.

  Additionally, several Ansible roles have been developed to automate different configuration tasks on Ubuntu-based servers, and they can be reused in other projects. These roles are used by the Pi-Cluster Ansible playbooks.

  The source code of each Ansible role can be found in its dedicated GitHub repository. Each role is also published in Ansible Galaxy to facilitate its installation with the `ansible-galaxy` command.

  | Ansible role | Description |
  |---|---|
  | ricsanfre.security | Automate SSH hardening configuration tasks |
  | ricsanfre.ntp | Chrony NTP service configuration |
  | ricsanfre.firewall | NFtables firewall configuration |
  | ricsanfre.dnsmasq | Dnsmasq configuration |
  | ricsanfre.bind9 | Bind9 configuration |
  | ricsanfre.storage | Configure LVM |
  | ricsanfre.iscsi_target | Configure iSCSI Target |
  | ricsanfre.iscsi_initiator | Configure iSCSI Initiator |
  | ricsanfre.k8s_cli | Install kubectl and Helm utilities |
  | ricsanfre.fluentbit | Configure fluentbit |
  | ricsanfre.minio | Configure Minio S3 server |
  | ricsanfre.backup | Configure Restic |
  | ricsanfre.vault | Configure Hashicorp Vault |

- Packaged Kubernetes applications (Helm, Kustomize, manifest files) to be deployed using FluxCD.

  Source code can be found in the Pi Cluster Git repository under the `/kubernetes` directory.

- This documentation website, picluster.ricsanfre.com, hosted on GitHub Pages.

  Static website generated with Jekyll.

  Source code can be found in the Pi-cluster repository under the `/docs` directory.

Software used and latest version tested

The following table lists the software used and the latest version tested of each component:

| Type | Software | Latest Version tested | Notes |
|---|---|---|---|
| OS | Ubuntu | 24.04.3 | |
| Control | Ansible | 2.18.6 | |
| Control | cloud-init | 23.1.2 | Version pre-integrated into Ubuntu 22.04.2 |
| Kubernetes | K3S | v1.35.0 | K3S version |
| Kubernetes | Helm | v3.17.3 | |
| Kubernetes | etcd | v3.6.6-k3s1 | Version pre-integrated into K3S |
| Computing | containerd | v2.1.5-k3s1 | Version pre-integrated into K3S |
| Networking | Cilium | 1.18.6 | |
| Networking | CoreDNS | v1.13.1 | Helm chart version: 1.45.2 |
| Networking | External-DNS | 0.20.0 | Helm chart version: 1.20.0 |
| Metric Server | Kubernetes Metrics Server | v0.8.0 | Helm chart version: 3.13.0 |
| Service Mesh | Istio | v1.28.3 | Helm chart version: 1.28.3 |
| Service Proxy | Ingress NGINX | v1.14.2 | Helm chart version: 4.14.2 |
| Storage | Longhorn | v1.10.1 | Helm chart version: 1.10.1 |
| Storage | Minio | RELEASE.2024-12-18T13-15-44Z | Helm chart version: 5.4.0 |
| TLS Certificates | Cert-Manager | v1.19.2 | Helm chart version: v1.19.2 |
| Logging | ECK Operator | 3.1.0 | Helm chart version: 3.1.0 |
| Logging | Elasticsearch | 8.19.10 | Deployed with ECK Operator |
| Logging | Kibana | 8.19.10 | Deployed with ECK Operator |
| Logging | Fluentbit | 4.2.2 | Helm chart version: 0.55.0 |
| Logging | Fluentd | 1.17.1 | Helm chart version: 0.5.3. Custom Docker image from official v1.17.1 |
| Logging | Loki | 3.6.3 | Helm chart grafana/loki version: 6.51.0 |
| Monitoring | Kube Prometheus Stack | v81.4.2 | Helm chart version: 81.4.2 |
| Monitoring | Prometheus Operator | v0.88.1 | Installed by Kube Prometheus Stack. Helm chart version: 81.4.2 |
| Monitoring | Prometheus | v3.9.1 | Installed by Kube Prometheus Stack. Helm chart version: 81.4.2 |
| Monitoring | AlertManager | v0.30.1 | Installed by Kube Prometheus Stack. Helm chart version: 81.4.2 |
| Monitoring | Prometheus Node Exporter | v1.10.2 | Installed as dependency of Kube Prometheus Stack chart. Helm chart version: 81.4.2 |
| Monitoring | Kube State Metrics | 2.18.0 | Installed as dependency of Kube Prometheus Stack chart. Helm chart version: 81.4.2 |
| Monitoring | Prometheus Elasticsearch Exporter | 1.10.0 | Helm chart version: prometheus-elasticsearch-exporter-7.2.1 |
| Monitoring | Grafana | 12.3.1 | Helm chart version: 10.5.15 |
| Tracing | Grafana Tempo | 2.9.0 | Helm chart: tempo-distributed (v1.61.3) |
| Backup | Minio External (self-hosted) | RELEASE.2025-10-15T17-29-55Z | |
| Backup | Restic | 0.18.0 | |
| Backup | Velero | 1.17.1 | Helm chart version: 11.3.2 |
| Secrets Management | Hashicorp Vault | 1.21.2 | |
| Secrets Management | External Secrets Operator | 1.3.1 | Helm chart version: 1.3.1 |
| Identity Access Management | Keycloak | 26.5.2 | Keycloak Operator |
| Identity Access Management | OAuth2 Proxy | 7.13.0 | Helm chart version: 10.1.2 |
| GitOps | Flux CD | v2.7.3 | |
| GitOps | Flux Tofu Controller | 0.16.0 | |